Integrating a Java REST API With a Database

Using MongoDB and Docker Compose

Introduction

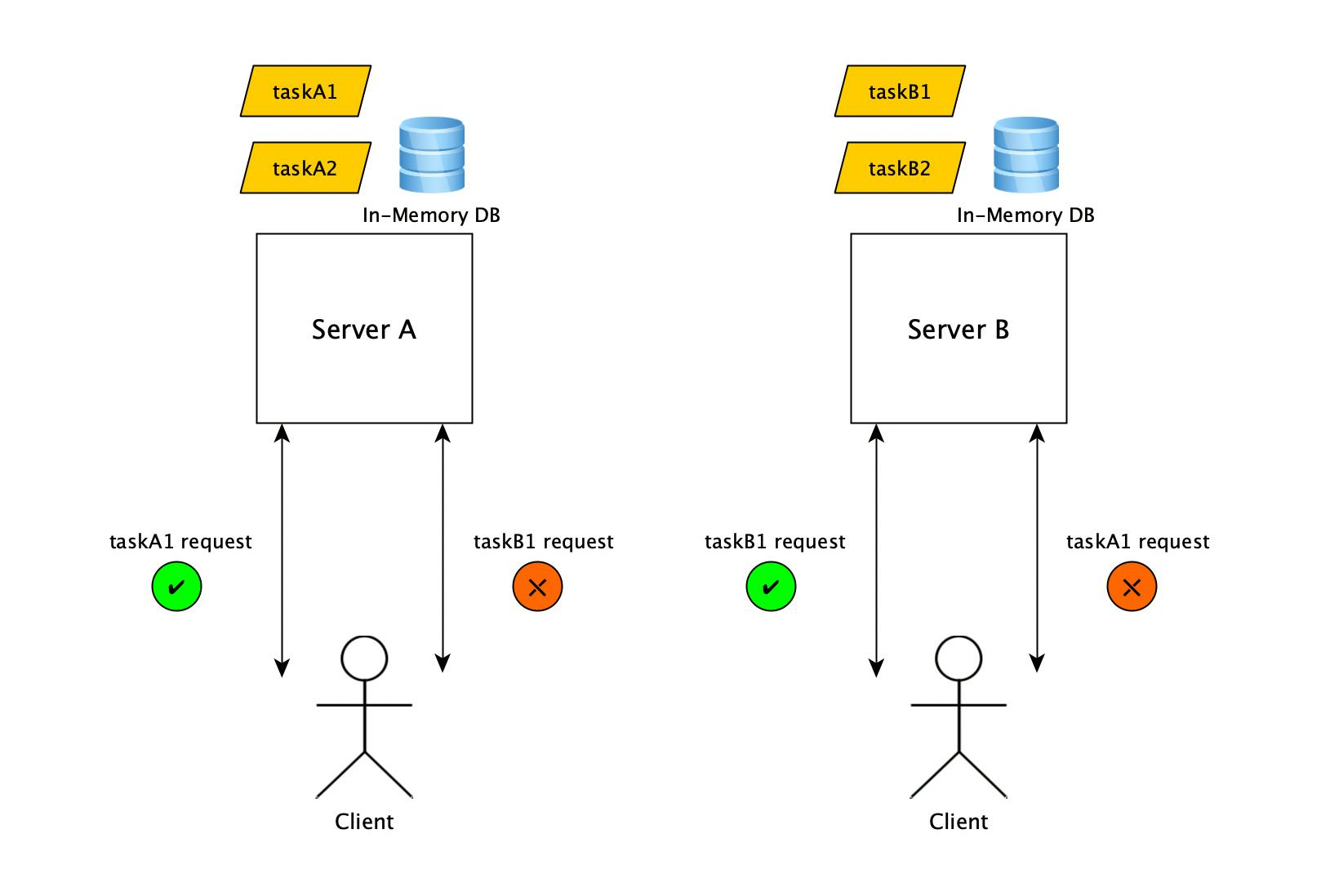

This article is a follow-up to my last tutorial on building a fully functional Java REST API for managing TODO tasks. For simplicity, last time we used an in-memory database as the implementation of the storage interface defined by our business logic. This solution is clearly not suitable for a production-ready API, mainly because:

we will lose the stored tasks every time we stop the application

different application instances will not be able to share memory - in other words, if we horizontally scale our service out to two servers, A and B, then tasks created through server A will not be visible through server B.

This is illustrated in the diagram below:

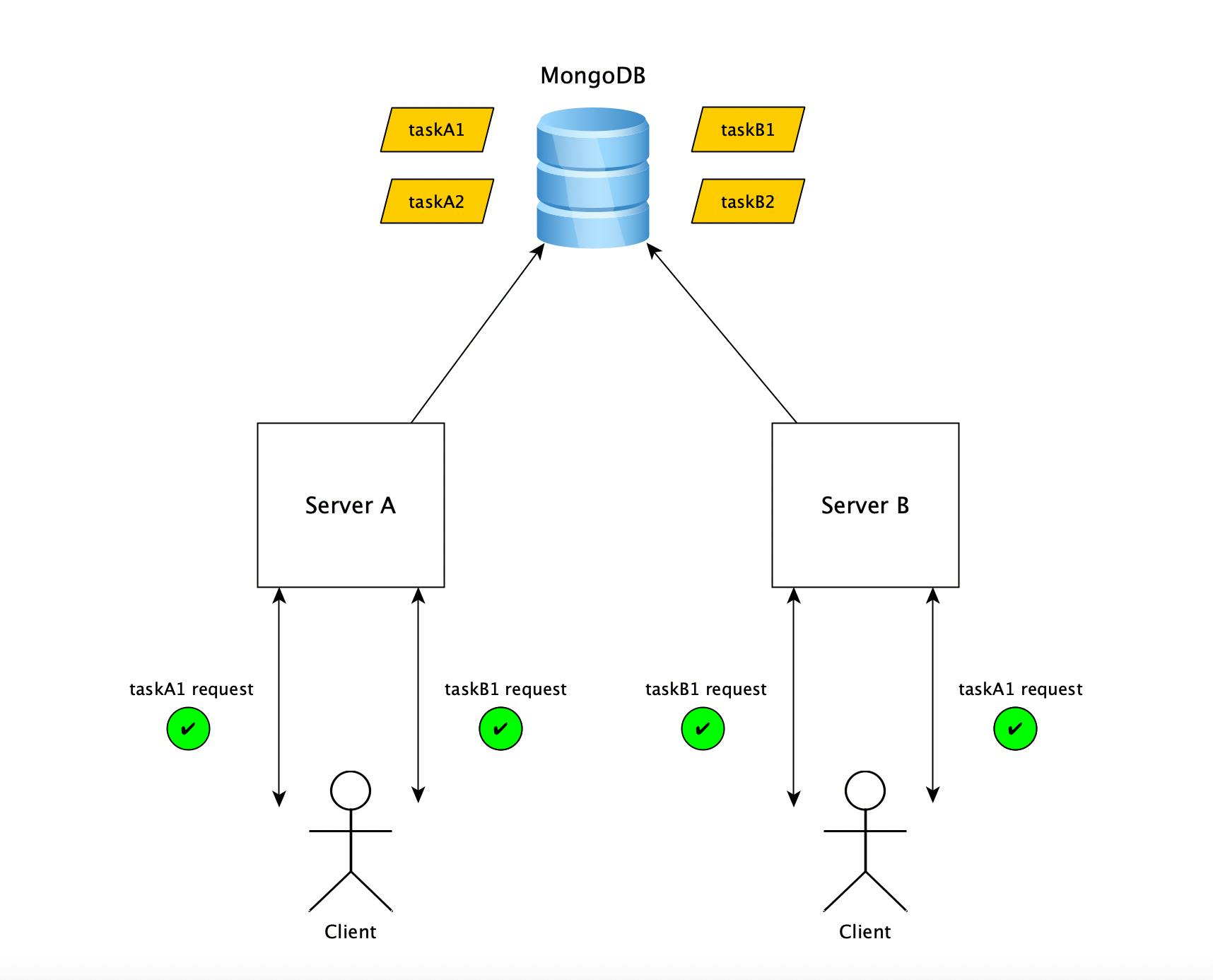
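The second limitation is easy to reproduce in plain Java. Below is a minimal sketch in which each "server" owns its own HashMap, just like each instance of the in-memory repository would. The class and method names are hypothetical and not taken from the original service.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class SeparateInstancesDemo {

    // Hypothetical stand-in for one service instance holding its own in-memory storage.
    static class ServiceInstance {
        private final Map<String, String> tasks = new HashMap<>();

        void createTask(String id, String title) {
            tasks.put(id, title);
        }

        Optional<String> getTask(String id) {
            return Optional.ofNullable(tasks.get(id));
        }
    }

    public static void main(String[] args) {
        ServiceInstance serverA = new ServiceInstance();
        ServiceInstance serverB = new ServiceInstance();

        serverA.createTask("task-1", "buy milk");

        // Server A sees the task it stored...
        System.out.println("A: " + serverA.getTask("task-1").isPresent()); // A: true
        // ...but server B has its own map, so the task is invisible to it.
        System.out.println("B: " + serverB.getTask("task-1").isPresent()); // B: false
    }
}
```

A shared external store is what removes this problem. Every instance then talks to the same database instead of to its own heap.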

In this tutorial I want to demonstrate how we can integrate the API we built in the previous article with MongoDB, a popular open-source non-relational database. This means changing the diagram above so that all service instances store their tasks in the same MongoDB database.

For simplicity, I chose MongoDB, but technically we could also have chosen a relational database such as PostgreSQL. If we were to choose between the two, we would need to know a bit more about the software requirements of our service - things like:

scalability - how much is the application expected to grow in the future

expected traffic pattern - how many clients are going to use the service, what request rate they will use, read vs write ratio, etc.

access patterns - do we have a well-defined access pattern requested by our business stakeholders or do we need an approach that allows for general search queries

The API specification is not enough on its own to make this decision, but for the purpose of this tutorial, we can go ahead and use MongoDB.

Current State

A quick reminder of what we built before is given below:

- a repository interface defining the functionality our business logic requires for storing and retrieving tasks

import java.util.List;
import java.util.Optional;

public interface TaskManagementRepository {

    void save(Task task);

    List<Task> getAll();

    Optional<Task> get(String taskID);

    void delete(String taskID);
}

- an in-memory DB implementation of the repository interface that uses a hash map to store and retrieve tasks

import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.stream.Collectors;

public class InMemoryTaskManagementRepository implements TaskManagementRepository {

    // Tasks keyed by their identifier; lives only as long as the JVM does
    private final Map<String, Task> tasks = new HashMap<>();

    @Override
    public void save(Task task) {
        tasks.put(task.getIdentifier(), task);
    }

    @Override
    public List<Task> getAll() {
        return tasks.values().stream()
                .collect(Collectors.toUnmodifiableList());
    }

    @Override
    public Optional<Task> get(String taskID) {
        return Optional.ofNullable(tasks.get(taskID));
    }

    @Override
    public void delete(String taskID) {
        tasks.remove(taskID);
    }
}

- a Guice binding that ensures every piece of business logic that needs to use a TaskManagementRepository instance will use the same InMemoryTaskManagementRepository instance

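import com.google.inject.AbstractModule;
import com.google.inject.Singleton;

public class ApplicationModule extends AbstractModule {

    @Override
    public void configure() {
        // One shared instance serves every class that depends on TaskManagementRepository
        bind(TaskManagementRepository.class).to(InMemoryTaskManagementRepository.class).in(Singleton.class);
    }
}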
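Whatever replaces the in-memory repository has to honour the same contract. A save with an existing identifier replaces the stored task, get returns an empty Optional for unknown IDs, and delete removes the task. The sketch below exercises these behaviours by hand, without Guice. The two-field Task class is a hypothetical stand-in for the richer one from the previous article.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.stream.Collectors;

public class InMemoryRepositoryDemo {

    // Hypothetical minimal Task; the real class from the previous article has more fields.
    static final class Task {
        private final String identifier;
        private final String title;

        Task(String identifier, String title) {
            this.identifier = identifier;
            this.title = title;
        }

        String getIdentifier() { return identifier; }

        String getTitle() { return title; }
    }

    interface TaskManagementRepository {
        void save(Task task);
        List<Task> getAll();
        Optional<Task> get(String taskID);
        void delete(String taskID);
    }

    // Same logic as the in-memory repository shown above.
    static class InMemoryTaskManagementRepository implements TaskManagementRepository {
        private final Map<String, Task> tasks = new HashMap<>();

        @Override public void save(Task task) { tasks.put(task.getIdentifier(), task); }
        @Override public List<Task> getAll() { return tasks.values().stream().collect(Collectors.toUnmodifiableList()); }
        @Override public Optional<Task> get(String taskID) { return Optional.ofNullable(tasks.get(taskID)); }
        @Override public void delete(String taskID) { tasks.remove(taskID); }
    }

    public static void main(String[] args) {
        TaskManagementRepository repository = new InMemoryTaskManagementRepository();

        repository.save(new Task("task-1", "original title"));
        // Saving again with the same identifier replaces the stored task (insert-or-replace).
        repository.save(new Task("task-1", "new title"));

        System.out.println(repository.getAll().size());                 // 1
        System.out.println(repository.get("task-1").get().getTitle()); // new title
        System.out.println(repository.get("missing").isPresent());     // false

        repository.delete("task-1");
        System.out.println(repository.getAll().isEmpty());              // true
    }
}
```

The insert-or-replace behaviour of save is the part that is easiest to miss when writing a database-backed version. In MongoDB it corresponds to a replace with upsert enabled.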

Target State

The goal of this tutorial is to implement a new class called MongoDBTaskManagementRepository which will be an implementation of the repository interface that uses MongoDB for persistent storage.

We are going to run the DB as a separate Docker container. Therefore, our application will now consist of two containers: the service container and the DB container. We will use Docker Compose to manage the multi-container application as a single entity. Keep in mind that this is not a Docker-focused tutorial, so I won't go into best practices for running a database with Docker, or into how to use Docker and Docker Compose in general. Feel free to leave a comment if you would like to see a separate article on this.

Database Integration

MongoDB Container Setup

We start by pulling a MongoDB Docker image and setting up our Docker Compose file to start two containers - one for the service itself and one for a MongoDB instance:

docker pull mongo:5.0.9

The Docker Compose file is given below. Notice that we mount a persistent volume to the MongoDB container, since we don't want the data to be wiped out whenever the container crashes or is rebuilt. This means that any data stored in /data/db on the MongoDB container will be stored under .data/mongo (relative to the project root folder) on your laptop. Keep in mind that /data/db is where the MongoDB container stores data by default.

version: "3.9"
services:
  mongo:
    image: "mongo:5.0.9"
    volumes:
      - .data/mongo:/data/db
  webapp:
    build: .
    depends_on:
      - "mongo"
    ports:
      - "8080:8080"

To start the application, we run docker-compose up --build -d, which effectively does three things:

creates a container running the MongoDB image - using the default MongoDB port 27017 for connections

re-builds the image for our REST API and creates a container running the service - port 8080 of the container is published on your laptop so that you can connect to the API

creates a virtual network connecting the two containers

To stop the application and clean up the resources (containers and network), run docker-compose down.

The full commit for this step can be found here.

Repository Interface Implementation

Now that we have a MongoDB instance running, we need to create a new class - MongoDBTaskManagementRepository - that will implement the repository interface.

import static com.mongodb.client.model.Filters.eq;

import com.google.inject.Inject;
import com.mongodb.client.FindIterable;
import com.mongodb.client.MongoCollection;
import com.mongodb.client.model.ReplaceOptions;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import org.bson.Document;
import org.bson.codecs.pojo.annotations.BsonProperty;

public class MongoDBTaskManagementRepository implements TaskManagementRepository {

    private final MongoCollection<MongoDBTask> tasksCollection;

    @Inject
    public MongoDBTaskManagementRepository(MongoCollection<MongoDBTask> tasksCollection) {
        this.tasksCollection = tasksCollection;
    }

    @Override
    public void save(Task task) {
        MongoDBTask mongoDBTask = toMongoDBTask(task);
        // upsert(true): insert the document if no task with this _id exists, otherwise replace it
        ReplaceOptions replaceOptions = new ReplaceOptions()
                .upsert(true);
        tasksCollection.replaceOne(eq("_id", task.getIdentifier()), mongoDBTask, replaceOptions);
    }

    @Override
    public List<Task> getAll() {
        FindIterable<MongoDBTask> mongoDBTasks = tasksCollection.find();
        List<Task> tasks = new ArrayList<>();
        for (MongoDBTask mongoDBTask : mongoDBTasks) {
            tasks.add(fromMongoDBTask(mongoDBTask));
        }
        return tasks;
    }

    @Override
    public Optional<Task> get(String taskID) {
        // first() returns null when no document matches the filter
        Optional<MongoDBTask> mongoDBTask = Optional.ofNullable(
                tasksCollection.find(eq("_id", taskID)).first());
        return mongoDBTask.map(this::fromMongoDBTask);
    }

    @Override
    public void delete(String taskID) {
        Document taskIDFilter = new Document("_id", taskID);
        tasksCollection.deleteOne(taskIDFilter);
    }

    private Task fromMongoDBTask(MongoDBTask mongoDBTask) {
        return Task.builder(mongoDBTask.getTitle(), mongoDBTask.getDescription())
                .withIdentifier(mongoDBTask.getIdentifier())
                .withCompleted(mongoDBTask.isCompleted())
                .withCreatedAt(Instant.ofEpochMilli(mongoDBTask.getCreatedAt()))
                .build();
    }

    private MongoDBTask toMongoDBTask(Task task) {
        MongoDBTask mongoDBTask = new MongoDBTask();
        mongoDBTask.setIdentifier(task.getIdentifier());
        mongoDBTask.setTitle(task.getTitle());
        mongoDBTask.setDescription(task.getDescription());
        mongoDBTask.setCreatedAt(task.getCreatedAt().toEpochMilli());
        mongoDBTask.setCompleted(task.isCompleted());
        return mongoDBTask;
    }

    // Must not be private: MongoDBModule refers to MongoDBTaskManagementRepository.MongoDBTask
    public static class MongoDBTask {

        @BsonProperty("_id")
        private String identifier;

        private String title;

        private String description;

        @BsonProperty("created_at")
        private long createdAt;

        private boolean completed;

        ...
    }

    ...
}

Given that this is not a MongoDB-specific tutorial, I skipped a few implementation details around the MongoDB Java driver. In essence, we are using a very basic implementation that maps the nested MongoDBTask POJO to a MongoDB document. For more details, see the official tutorial.
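One consequence of this mapping is worth knowing. createdAt is stored as epoch milliseconds (a long), so any sub-millisecond precision in the Instant is dropped on the way into MongoDB. The sketch below isolates the two conversions used by toMongoDBTask and fromMongoDBTask. The helper names are hypothetical.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;

public class CreatedAtRoundTrip {

    // Mirrors toMongoDBTask: the Instant is stored as epoch milliseconds.
    static long toStoredCreatedAt(Instant createdAt) {
        return createdAt.toEpochMilli();
    }

    // Mirrors fromMongoDBTask: the stored long is turned back into an Instant.
    static Instant fromStoredCreatedAt(long storedCreatedAt) {
        return Instant.ofEpochMilli(storedCreatedAt);
    }

    public static void main(String[] args) {
        // An instant with nanosecond precision.
        Instant original = Instant.parse("2022-07-29T09:20:25.123456789Z");

        Instant restored = fromStoredCreatedAt(toStoredCreatedAt(original));

        System.out.println(restored);                                                  // 2022-07-29T09:20:25.123Z
        System.out.println(restored.equals(original));                                 // false
        System.out.println(restored.equals(original.truncatedTo(ChronoUnit.MILLIS))); // true
    }
}
```

Consider a test that saves a task and then compares it field by field with the one read back. Such a test would need to compare createdAt at millisecond precision.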

The full commit for this step can be found here.

Binding Everything Together

You might have noticed that the MongoDB repository implementation relies on a MongoCollection<MongoDBTask> instance being injected. MongoCollection is the driver's interface for interacting with an actual MongoDB collection. In this section, we will write the code for connecting to the database and binding an instance of it. Since we are using Guice for dependency injection, we will encapsulate this logic in its own Guice module and then install the new module in the main application module.

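import static org.bson.codecs.configuration.CodecRegistries.fromProviders;
import static org.bson.codecs.configuration.CodecRegistries.fromRegistries;

import com.google.inject.AbstractModule;
import com.google.inject.Provides;
import com.google.inject.Singleton;
import com.mongodb.ConnectionString;
import com.mongodb.MongoClientSettings;
import com.mongodb.client.MongoClient;
import com.mongodb.client.MongoClients;
import com.mongodb.client.MongoCollection;
import com.mongodb.client.MongoDatabase;
import org.bson.codecs.configuration.CodecRegistry;
import org.bson.codecs.pojo.PojoCodecProvider;

public class MongoDBModule extends AbstractModule {

    // @Singleton: create one MongoClient (and its connection pool) for the whole application
    @Provides
    @Singleton
    private MongoCollection<MongoDBTaskManagementRepository.MongoDBTask> provideMongoCollection() {
        // The connection string comes from the MongoDB_URI environment variable (set in the Docker Compose file)
        ConnectionString connectionString = new ConnectionString(System.getenv("MongoDB_URI"));

        // Combine the driver's default codecs with automatic POJO mapping for MongoDBTask
        CodecRegistry pojoCodecRegistry = fromProviders(PojoCodecProvider.builder().automatic(true).build());
        CodecRegistry codecRegistry = fromRegistries(MongoClientSettings.getDefaultCodecRegistry(), pojoCodecRegistry);

        MongoClientSettings mongoClientSettings = MongoClientSettings.builder()
                .applyConnectionString(connectionString)
                .codecRegistry(codecRegistry)
                .build();

        MongoClient mongoClient = MongoClients.create(mongoClientSettings);
        MongoDatabase mongoDatabase = mongoClient.getDatabase("tasks_management_db");
        return mongoDatabase.getCollection("tasks", MongoDBTaskManagementRepository.MongoDBTask.class);
    }
}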
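provideMongoCollection reads MongoDB_URI with System.getenv. That call returns null when the variable is missing, for example when the service is started outside Docker Compose. A small helper like the hypothetical one below makes that failure explicit at startup. It takes a Map rather than calling System.getenv directly, which keeps it easy to test, since System.getenv() itself returns a Map<String, String>.

```java
import java.util.Map;
import java.util.Optional;

public class MongoUriResolver {

    static final String MONGODB_URI_VARIABLE = "MongoDB_URI";

    // Hypothetical helper: returns the configured URI, or fails fast with a clear message
    // instead of handing a null or blank string to the MongoDB driver.
    static String resolveMongoUri(Map<String, String> environment) {
        return Optional.ofNullable(environment.get(MONGODB_URI_VARIABLE))
                .filter(uri -> !uri.isBlank())
                .orElseThrow(() -> new IllegalStateException(
                        "Environment variable " + MONGODB_URI_VARIABLE + " is not set"));
    }

    public static void main(String[] args) {
        // In the module this would be: new ConnectionString(resolveMongoUri(System.getenv()))
        String uri = resolveMongoUri(Map.of(MONGODB_URI_VARIABLE, "mongodb://localhost:27017"));
        System.out.println(uri); // mongodb://localhost:27017
    }
}
```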

To use this new module, we need to install it in our main application module and change the binding to use the MongoDB repository implementation:

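public class ApplicationModule extends AbstractModule {

    @Override
    public void configure() {
        // Bind the repository interface to the MongoDB implementation instead of the in-memory one
        bind(TaskManagementRepository.class).to(MongoDBTaskManagementRepository.class).in(Singleton.class);
        // Makes the MongoCollection<MongoDBTask> provider available for injection
        install(new MongoDBModule());
    }
}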

You can see in the MongoDB module that we use a database named tasks_management_db and a collection named tasks, but we never actually created either of them. This works because MongoDB automatically creates both the database and the collection as soon as we insert the first document, that is, when we create the first task through our API.

The final piece for binding everything together is to set the MongoDB_URI environment variable, which the task management service container uses to connect to the database. This can be done through the Docker Compose file:

version: "3.9"
services:
  webapp:
    ...
    environment:
      - MongoDB_URI=mongodb://taskmanagementservice_mongo_1:27017
    ...

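The URI is built from the default port MongoDB listens on (27017) and the container name Docker Compose generates by default. That name combines the project name (taken from the project folder), the service name and an index, giving taskmanagementservice_mongo_1 here.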

The full commit for this step can be found here.

EDIT: After this blog post was first written, Docker Compose changed its default container naming convention, so the MongoDB container ended up being named taskmanagementservice-mongo-1 instead of taskmanagementservice_mongo_1. To keep the deployment working, an additional change pins the container names explicitly:

version: "3.9"
services:
  mongo:
    image: "mongo:5.0.9"
    container_name: taskmanagementservice_mongodb
    volumes:
      - .data/mongo:/data/db
  webapp:
    build: .
    container_name: taskmanagementservice_api
    depends_on:
      - "mongo"
    environment:
      - MongoDB_URI=mongodb://taskmanagementservice_mongodb:27017
    ports:
      - "8080:8080"

The full commit for this change can be found here.
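To make the naming change concrete, here is a sketch of the two default conventions. Compose V1 joins the project name, service name and index with underscores, and Compose V2 uses hyphens. The helper names are hypothetical, and the project name taskmanagementservice is assumed from the container names above.

```java
public class ComposeContainerNames {

    // Compose V1 default container name: <project>_<service>_<index>
    static String composeV1Name(String project, String service, int index) {
        return project + "_" + service + "_" + index;
    }

    // Compose V2 default container name: <project>-<service>-<index>
    static String composeV2Name(String project, String service, int index) {
        return project + "-" + service + "-" + index;
    }

    // Connection string for MongoDB's default port 27017 on the given host name.
    static String mongoUri(String host) {
        return "mongodb://" + host + ":27017";
    }

    public static void main(String[] args) {
        // Assumes the project name is taskmanagementservice (derived from the project folder).
        System.out.println(mongoUri(composeV1Name("taskmanagementservice", "mongo", 1)));
        // mongodb://taskmanagementservice_mongo_1:27017
        System.out.println(mongoUri(composeV2Name("taskmanagementservice", "mongo", 1)));
        // mongodb://taskmanagementservice-mongo-1:27017
    }
}
```

Pinning container_name removes the dependency on either convention. An alternative worth checking is that, on the default network Compose creates, each container is also reachable under its service name, so mongodb://mongo:27017 should work with both conventions. Also note that Compose cannot scale a service beyond one container once container_name is set. This matters if the webapp service is later scaled out as described in the introduction.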

Testing The Service

Before we start testing, let's re-build the full stack from scratch:

docker-compose down
docker-compose up --build -d

Now, both the service and MongoDB should be up and running. We will once again use curl to test the CRUD API. Ideally, these manual tests should be automated as integration tests, but we will leave that for another article focused on testing:

- creating a few tasks

curl -i -X POST -H "Content-Type:application/json" -d "{\"title\": \"test-title\", \"description\":\"description\"}" "http://localhost:8080/api/tasks"

HTTP/1.1 201
Location: http://localhost:8080/api/tasks/cb06d6a1-960b-47eb-b44b-de0b01303020
Content-Length: 0
Date: Fri, 29 Jul 2022 09:20:25 GMT

curl -i -X POST -H "Content-Type:application/json" -d "{\"title\": \"test-title\", \"description\":\"description\"}" "http://localhost:8080/api/tasks"

HTTP/1.1 201
Location: http://localhost:8080/api/tasks/3c4c7a7d-b680-4be5-9dd4-51d02225a700
Content-Length: 0
Date: Fri, 29 Jul 2022 09:20:32 GMT

- retrieving a task

curl -i -X GET "http://localhost:8080/api/tasks/cb06d6a1-960b-47eb-b44b-de0b01303020"

HTTP/1.1 200
Content-Type: application/json
Content-Length: 159
Date: Fri, 29 Jul 2022 09:21:04 GMT

{"identifier":"cb06d6a1-960b-47eb-b44b-de0b01303020","title":"test-title","description":"description","createdAt":"2022-07-29T09:20:25.410Z","completed":false}

- retrieving a non-existing task

curl -i -X GET "http://localhost:8080/api/tasks/random-task-id-123"

HTTP/1.1 404
Content-Type: application/json
Content-Length: 81
Date: Fri, 29 Jul 2022 09:21:19 GMT

{"message":"Task with the given identifier cannot be found - random-task-id-123"}

- retrieving all tasks

curl -i -X GET "http://localhost:8080/api/tasks"

HTTP/1.1 200
Content-Type: application/json
Content-Length: 321
Date: Fri, 29 Jul 2022 09:21:30 GMT

[{"identifier":"cb06d6a1-960b-47eb-b44b-de0b01303020","title":"test-title","description":"description","createdAt":"2022-07-29T09:20:25.410Z","completed":false},{"identifier":"3c4c7a7d-b680-4be5-9dd4-51d02225a700","title":"test-title","description":"description","createdAt":"2022-07-29T09:20:32.851Z","completed":false}]

- deleting a task

curl -i -X DELETE "http://localhost:8080/api/tasks/3c4c7a7d-b680-4be5-9dd4-51d02225a700"

HTTP/1.1 204
Date: Fri, 29 Jul 2022 09:22:11 GMT

- patching a task

curl -i -X PATCH -H "Content-Type:application/json" -d "{\"completed\": true, \"title\": \"new-title\", \"description\":\"new-description\"}" "http://localhost:8080/api/tasks/cb06d6a1-960b-47eb-b44b-de0b01303020"

HTTP/1.1 200
Content-Length: 0
Date: Fri, 29 Jul 2022 09:22:34 GMT
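These checks are meant to become integration tests eventually. As a hedged first step, the same curl calls can be expressed with the JDK's built-in java.net.http client (Java 11+). The class and method names below are hypothetical, and the sketch only builds the requests without sending anything.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class TaskApiRequests {

    static final String BASE_URL = "http://localhost:8080/api/tasks";

    // Equivalent of: curl -X POST -H "Content-Type:application/json" -d "{...}" .../api/tasks
    static HttpRequest createTask(String title, String description) {
        // Naive JSON building for illustration only; it does not escape quotes in the values.
        String body = "{\"title\": \"" + title + "\", \"description\": \"" + description + "\"}";
        return HttpRequest.newBuilder(URI.create(BASE_URL))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Equivalent of: curl -X GET .../api/tasks/{id}
    static HttpRequest getTask(String taskId) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/" + taskId)).GET().build();
    }

    // Equivalent of: curl -X DELETE .../api/tasks/{id}
    static HttpRequest deleteTask(String taskId) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/" + taskId)).DELETE().build();
    }

    // Equivalent of the PATCH call; the builder has no PATCH() shortcut, so method(...) is used.
    static HttpRequest patchTask(String taskId, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/" + taskId))
                .header("Content-Type", "application/json")
                .method("PATCH", HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = createTask("test-title", "description");
        System.out.println(request.method() + " " + request.uri()); // POST http://localhost:8080/api/tasks
        // With the stack running, a test could send it with
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.discarding())
        // and then check that statusCode() is 201 and that a Location header is present.
    }
}
```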

So far, all the tests we executed look pretty much the same as the ones in the previous article. However, previously, restarting the service meant losing all the data. Let's check whether that still happens by rebuilding the full stack again:

docker-compose down
docker-compose up --build -d
curl -i -X GET "http://localhost:8080/api/tasks"

HTTP/1.1 200
Content-Type: application/json
Content-Length: 163
Date: Fri, 29 Jul 2022 09:27:25 GMT

[{"identifier":"cb06d6a1-960b-47eb-b44b-de0b01303020","title":"new-title","description":"new-description","createdAt":"2022-07-29T09:20:25.410Z","completed":true}]

As we can see, the data is now persistently stored - we still have the original task we initially created and then updated as part of testing.

Summary

In conclusion, as part of this tutorial we:

set up a MongoDB container

used Docker Compose to manage a multi-container application

integrated a Java REST API with a MongoDB database

The key point I wanted to show in this article is that switching from an in-memory implementation to a proper database required only three things. We created a new implementation of the repository interface, configured the database connection inside a new Guice module, and changed one line of existing code so that our business logic uses the new repository implementation.

We changed the Guice binding from:

bind(TaskManagementRepository.class).to(InMemoryTaskManagementRepository.class).in(Singleton.class);

to:

bind(TaskManagementRepository.class).to(MongoDBTaskManagementRepository.class).in(Singleton.class);

We never touched any of the core business logic of our API, and this is the beauty of clean architecture: our code is not coupled to the fact that we chose to persist our data in a database rather than in memory. That is an implementation detail, and changing the storage mechanism should not affect the rest of our code.
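Stripped of Guice, the idea comes down to constructor injection against an interface. Below is a minimal sketch. The repository is simplified to ID-to-title pairs, and the TreeMap-backed second implementation is hypothetical, standing in for the MongoDB one.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

public class SwapImplementationDemo {

    // Simplified repository interface: a task is reduced to an ID and a title.
    interface TaskManagementRepository {
        void save(String taskID, String title);

        Optional<String> get(String taskID);
    }

    // Counterpart of the in-memory implementation.
    static class InMemoryTaskManagementRepository implements TaskManagementRepository {
        private final Map<String, String> tasks = new HashMap<>();

        @Override
        public void save(String taskID, String title) { tasks.put(taskID, title); }

        @Override
        public Optional<String> get(String taskID) { return Optional.ofNullable(tasks.get(taskID)); }
    }

    // Hypothetical second implementation, standing in for MongoDBTaskManagementRepository.
    static class SortedTaskManagementRepository implements TaskManagementRepository {
        private final Map<String, String> tasks = new TreeMap<>();

        @Override
        public void save(String taskID, String title) { tasks.put(taskID, title); }

        @Override
        public Optional<String> get(String taskID) { return Optional.ofNullable(tasks.get(taskID)); }
    }

    // Business logic: depends only on the interface, received through its constructor.
    static class TaskService {
        private final TaskManagementRepository repository;

        TaskService(TaskManagementRepository repository) {
            this.repository = repository;
        }

        void create(String taskID, String title) {
            repository.save(taskID, title);
        }

        String titleOf(String taskID) {
            return repository.get(taskID)
                    .orElseThrow(() -> new IllegalArgumentException("Task not found: " + taskID));
        }
    }

    public static void main(String[] args) {
        // The only line naming a concrete class; it plays the role of the Guice binding.
        TaskManagementRepository repository = new SortedTaskManagementRepository();

        TaskService service = new TaskService(repository);
        service.create("task-1", "write article");
        System.out.println(service.titleOf("task-1")); // write article
    }
}
```

Switching back to the in-memory implementation means changing only the marked line in main. TaskService compiles and behaves the same either way, which is what the one-line binding change achieves in the real service.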